Today, we stand at a crucial crossroads in the history of humanity, a juncture that marks a before and after in our evolution, not just technologically, but also morally and socially. Artificial intelligence, this colossus striding among us, is at the center of this crossroads. It’s a topic that forces us to reflect not only on what we can do, but also on what we should do.

Let’s take a moment to reflect on humanity’s journey, on how our history has been marked by revolutionary inventions, from the discovery of fire to the creation of the wheel. These advancements were not just technical, but represented evolutionary leaps in how we interact with the world around us. Today, AI represents an equally significant evolutionary leap, but with an unprecedented complexity. It’s a force with the power to redefine the very foundations of our society, our economy, our culture, and even our personal intimacy.

However, as with every major historical turning point, there are pitfalls. AI, in its essence, is a neutral tool, but in human hands, it can transform into something very different. It can become a means to amplify our best qualities, like creativity, intelligence, and empathy. At the same time, it can also become a tool for exercising unprecedented control, a means to limit and direct human thought and creativity along predefined and restrictive paths.

When we ponder the ethical control of Artificial Intelligence, we face a complex paradox with deep roots in human history, particularly in the early days of the printing press. The press, invented by Gutenberg in the 15th century, was one of the first technologies to bear the weight of ethical control and censorship. Just like AI today, the press opened up a wealth of possibilities, democratizing access to information and knowledge. However, it soon became an object of suspicion and censorship by institutions and authorities fearful of its potential to spread revolutionary and subversive ideas.

The ethical control of AI, if poorly managed, risks following the same path. While it’s imperative to have an ethical framework that governs how AI is used and prevents the abuses and dangers of irresponsible deployment, a crucial question emerges: who establishes these ethical parameters? And based on what criteria?

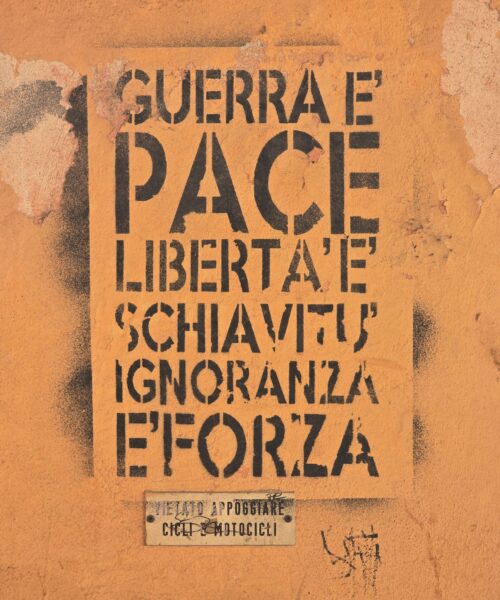

Just as authorities in the past attempted to control the flow of information and ideas through book censorship, there’s a risk that AI ethics could transform into a new tool of censorship. This modern censorship might not burn books, but could limit, filter, or direct artificial intelligence in ways that suppress diversity, creativity, and freedom of expression. In this scenario, AI would no longer be a tool for liberation and innovation, but become a means to impose a uniform and singular worldview.

The danger is that, in the name of ethics, we end up stifling the very diversity and freedom of thought that are fundamental in any open and democratic society. In a world increasingly driven by AI, it’s vital that ethics do not become a pretext for oppressive control, but rather a balance that ensures the responsible and beneficial use of this technology.

The debate on the ethical control of AI is not just about defining rules and limits, but is a broader discourse on freedom, diversity, and innovation. We must learn from history’s lessons and ensure that AI, just like the printing press in the past, becomes a means to expand human horizons, not to restrict them.

Continuing this reflection, we can’t ignore the complex relationship between AI and creative freedom. AI has the potential to open new frontiers in art, writing, music, and all forms of human expression. However, if its capabilities are constrained by rigid and homogenizing ethics, we might find ourselves in a world where every creative work is valued not for its intrinsic worth, but for its adherence to predefined guidelines. In such a world, art loses its power to challenge, provoke, and explore new horizons.

The issue is not just whether AI can or cannot do something, but whether its actions should be limited and, if so, how and to what extent. This question touches the very foundations of our society: freedom of thought, freedom of expression, and the freedom to explore new ideas, even uncomfortable or controversial ones. In a world increasingly driven by AI, we must ensure that these freedoms are not only preserved but also amplified and celebrated.

It’s undeniable that AI poses fundamental questions about who we are and who we want to be. It’s not just a question of technology, but a question of human choices, values, and vision. How we manage this extraordinary technology will define the future not only of our societies but also of our very humanity.

We also can’t ignore the vital importance of continuous learning in an AI-dominated era. This concept is often touted as a solution for adapting to rapid changes in the job market. However, we must be careful not to reduce learning to a mere mechanical response to economic needs. Continuous learning should be a journey that develops critical thinking, the ability to question, and an understanding of the deeper implications of the technologies we adopt. It shouldn’t be just a way to adapt, but also a way to innovate, to imagine new possibilities, and to actively shape the world we live in.

But there’s a further level of complexity in this discourse: the power of AI to shape reality itself. In the world of AI, reality can be manipulated, filtered, even reinvented. This power, if used without adequate ethical and critical control, can lead to a distortion of our very sense of reality. We might find ourselves in a world where our perceptions, our beliefs, even our memories, are influenced or determined by algorithms we don’t fully understand and can’t control.

Finally, we must address the issue of responsibility. Who is accountable when AI makes wrong decisions? When its actions cause harm? This is not just a legal or technical question, but a deeply ethical one. In a world where machines make increasingly important decisions, the question of human responsibility becomes more elusive. That’s why it’s essential to develop a robust ethical and legal framework that clearly defines responsibility in cases of error or abuse.

AI presents us with unprecedented challenges, but also extraordinary opportunities. How we manage this technology, and how we integrate it into our society and our lives, will define our future. We must ensure that AI is an amplifier of our best qualities (our creativity, our intelligence, our empathy) and not a tool for control or suppression. The road ahead is complex and full of challenges, but also rich with possibilities. It’s up to us to choose the path to follow.